I’ve compared AI to the “intelligent” horse Clever Hans before—a supercharged Clever Hans that is so uncannily good at simulating uniquely human behavior in unprecedented ways that we’re intuitively baffled when it falls down.

Here’s what to remember: ChatGPT doesn’t understand what it’s saying. Remind yourself of this no matter how convinced you may feel to the contrary. Even though “understanding” is a vague term, I cannot think of any acceptable way to define “understand” that ChatGPT measures up to.

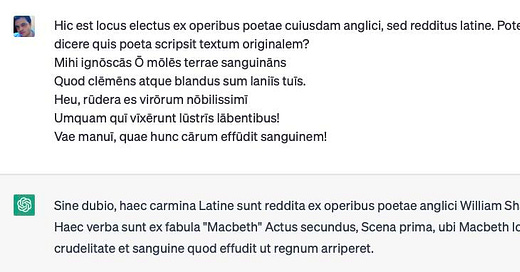

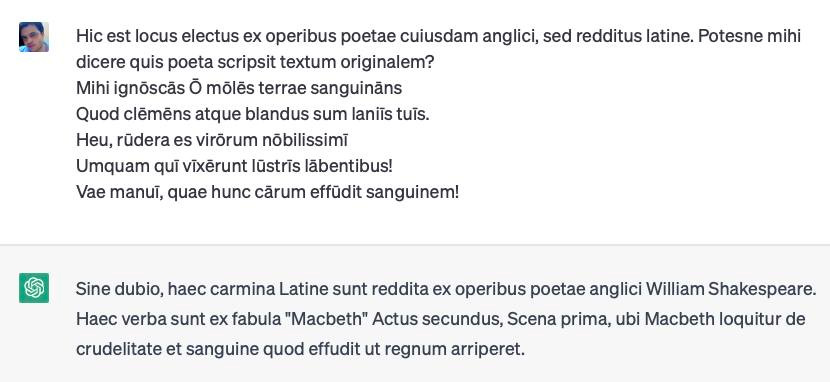

So when master linguist Alex Foreman translated part of Shakespeare’s Julius Caesar into Latin and asked ChatGPT (in Latin) to identify the source, it is amazing that it correctly identified the author, and equally amazing that it got the play wrong.

It even provided a specific citation for the original: Act II, Scene 1. But when told that the source was Julius Caesar, ChatGPT could then provide the right original:

Intuitively we want to ask how ChatGPT could possibly know that it was Shakespeare and yet not know the play. Did it have incorrect data? Did it make an error in judgment? Was it bullshitting?

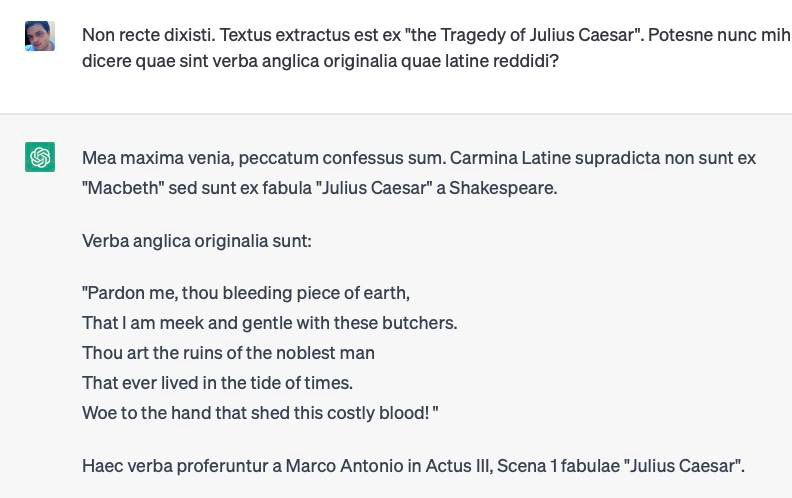

The answer is none of the above. All of those answers anthropomorphize ChatGPT far more than is merited. They all assume some kind of intention and coherence in ChatGPT that it simply doesn’t possess. ChatGPT and its LLM kin are AIs that can claim that the number 5 is not prime in one breath and then say that it is in the next, with no notion that they’ve just contradicted themselves and no understanding of what a contradiction even is.

Broadly speaking, these AIs proceed probabilistically, generating subsequent text based on what’s “suitable” given what went before. Often what’s suitable is true. Frequently it is not. The stunning achievements of deep-learning-based LLMs are all the more incredible given that nowhere in their systems do we see any representation of what is “true” or not. Unfortunately, they tempt us into thinking that we are a lot closer to ironing out that wrinkle (the absence of understanding and concepts) than we really are.
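As a deliberately crude illustration of that point, here is a toy next-word generator. It is a word-bigram model, not a neural network: real LLMs condition on long stretches of preceding tokens through learned weights, so treat this only as a sketch of the general principle, assuming nothing about ChatGPT's actual internals. The corpus and the helper names (`train_bigrams`, `generate`) are hypothetical. The model records only which word tends to follow which, with no notion of what is true, so it can produce both "5 is prime" and "5 is not prime", and even "7 is not prime", a sentence it never saw.

```python
import random
from collections import defaultdict

# Hypothetical toy corpus (an assumption for illustration): it contains a
# true and a false claim about 5, and the model has no way to tell them apart.
corpus = [
    "the number 5 is prime",
    "the number 5 is not prime",
    "the number 7 is prime",
]


def train_bigrams(sentences):
    """Count how often each word follows each other word.

    These counts are the model's only "knowledge"; nothing marks a
    sentence as true or false.
    """
    counts = defaultdict(lambda: defaultdict(int))
    for sentence in sentences:
        words = ["<s>"] + sentence.split() + ["</s>"]  # start/end markers
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts


def generate(counts, rng, max_words=10):
    """Pick each next word at random, weighted by how often it followed the previous one."""
    word, output = "<s>", []
    for _ in range(max_words):
        followers = counts[word]
        word = rng.choices(list(followers), weights=list(followers.values()))[0]
        if word == "</s>":
            break
        output.append(word)
    return " ".join(output)


model = train_bigrams(corpus)
rng = random.Random(0)  # fixed seed so runs are repeatable
samples = {generate(model, rng) for _ in range(50)}
for s in sorted(samples):
    print(s)
```

Nothing in `model` distinguishes the true sentences from the false ones: after "is", it continues with "prime" two times in three and "not" one time in three, whichever number came before. Each output is simply a statistically "suitable" continuation.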

That’s not to say that an AI would need some explicit symbolic representation of what’s true or not in order to stop making these sorts of mistakes. Human brains don’t have one. And because AI has developed along very different tracks than any lifeform, it’s hard to judge exactly how far it is from making the leap to some form of actual understanding. But given our human bias toward anthropomorphizing AI behavior, we can be reasonably sure it’s farther away than conventional wisdom currently has it.

That problem of understanding makes many of the hot-button questions less pressing than they feel, or at least drastically reframes them. If an AI doesn’t know or understand what it’s saying, how can it know or understand ethics? Can an AI without understanding truly align with our values if it can’t genuinely possess any values of its own? The issue is then not one of education but purely one of control.

And the broader control issue truly is pressing, because other achievements in AI—ones that don’t require human-style understanding—may be closer than we think. But framing it in terms of human values is not the way forward. These AIs don’t understand them.

13 ChatGPT Series, Ride 1 Snake.

7 Miles long,

How it can Eat you in 1 Instant,

As if to Uncover you in 1 Anxiety,

As if you anthropomorphized it more than it Deserves,

Like 1 Intention,

Like 1 Coherence,

Like 1 Capacity for n Contradictions,

Like what it simply does not Possess.

As if you will not be Afraid,

As Well As Stay calm,

astride n,

As if Flying,

As you will See,

As you will Do,

n beautiful Things.

Thanks for explaining it so well. It made me realize how often I anthropomorphize nonsentient items (not just computers, alas).