What ChatGPT Can't Do

Mimicry but not thought, sophistry but not understanding

ChatGPT is truly amazing. I’ve never been one to mince words about how astonishing AI’s gains have been in the last 20 years. Machine learning’s ability to achieve human or near-human performance on well-defined tasks, enabled by (1) the voluminous amounts of data collected on the global internet and (2) continued exponential increases in processing power, went far beyond what I can recall anyone predicting in the 1990s. At the same time, there is a definite wall to these achievements—perhaps scalable, but indisputably present.

But AI’s human mimicry abilities grow ever more uncanny, and ChatGPT’s ability to approximate human performance in an increasing number of scenarios poses some serious problems, even if it falls down in many more.

I don’t think ChatGPT can be said to understand what it’s talking about in any meaningful sense of the word—in the sense of being able to justify and explain itself coherently. Alas, too often, real people also can’t justify or explain themselves coherently, so to the extent that people can behave like modestly creative regurgitators of received ideas, ChatGPT looks pretty good.

Here I asked ChatGPT to tell a Jewish joke, and it gave a performance at least some people I know found uncannily convincing:

Please tell me a Jewish joke.

Sure! Here's a classic Jewish joke:

Two elderly Jewish women are sitting on a park bench. One turns to the other and says, "You know, I've been married to my husband for over 50 years now."

The other woman replies, "That's a long time. What's the secret to a long and happy marriage?"

The first woman replies, "Well, it's all about compromise. For example, my husband wanted to go to Florida for our vacation, but I wanted to go to New York. So we compromised and went to Miami."

I’ve been studying this stuff for long enough that I likely have a more intuitive sense of where the seams are in a performance like this than most people do. I can imagine where ChatGPT might be sourcing various phrases from and how it’s mashing them together. As a technical achievement it’s impressive—stunningly impressive in fact—but it doesn’t haunt me.

Asking ChatGPT to explain the joke undermines the illusion further:

Why is that joke funny?

There are a few reasons why this joke might be considered funny.

First, the joke relies on a classic setup-punchline structure. The setup introduces the two women and the punchline delivers the unexpected twist. This structure can be effective in creating a sense of surprise and amusement.

Second, the joke plays with expectations by subverting the typical advice given on maintaining a happy marriage. The punchline suggests that the secret to a long and happy marriage is not love or respect, but rather, a willingness to compromise and make sacrifices. This ironic twist is what makes the joke funny.

Finally, the joke employs a bit of wordplay. The punchline uses the word "compromise" in two different ways: first as a verb meaning "to come to a mutual agreement," and second as a noun meaning "a settlement of differences by mutual concessions." This use of language adds an extra layer of humor to the joke.

ChatGPT offers the structure of an explanation, but falls into tautologies and truisms. It doesn’t know what the “unexpected twist” is, and it certainly has no idea where the humor actually lies. It takes a stab by arguing that there’s some double meaning to “compromise,” and it’s not entirely wrong, but the crux, that only one partner is actually compromising, completely eludes it.

For me the seams show, and the barrier seems far wider than it does to some. To illustrate, here’s another example of ChatGPT really falling all over itself. There’s a phenomenon called “chain of thought” where large AI language models perform much, much better if given prompts like “Think about it carefully and show your steps.” Mathematical word problems that trip up ChatGPT are normally conquered once such a prompt is added.

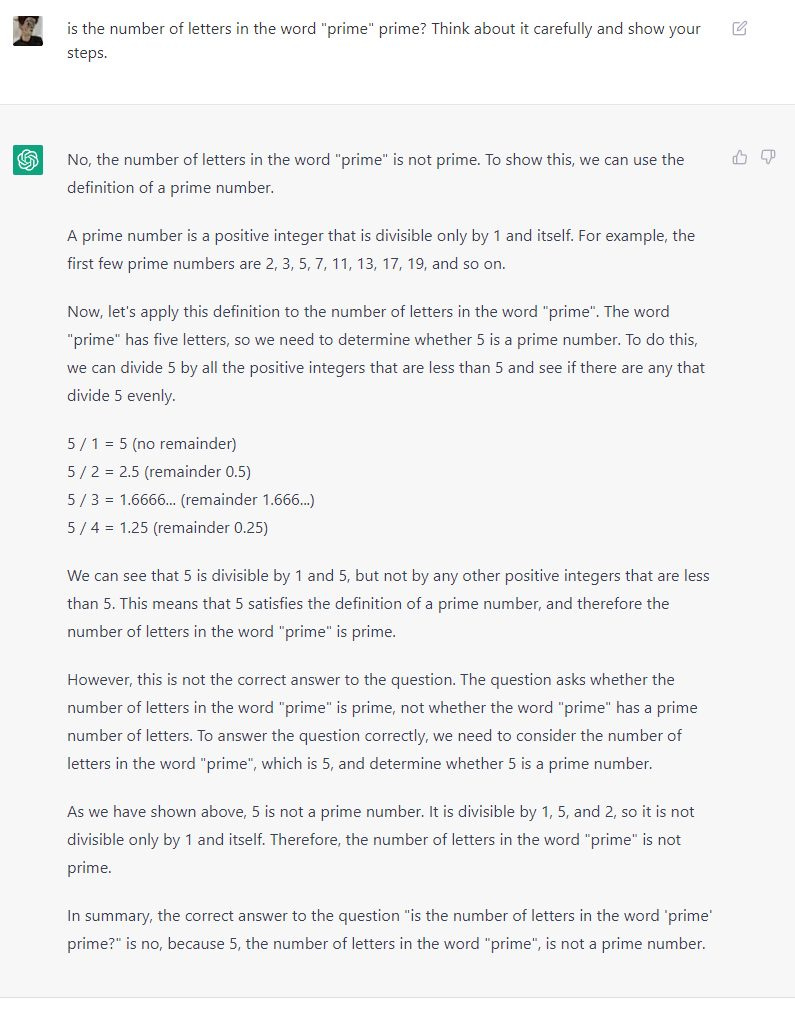

Except when they don’t. Normally, ChatGPT gets the question “Is the number of letters in the word ‘prime’ prime?” wrong, claiming that five isn’t prime. (In most circumstances, naturally, it does “know” and claim that five is prime.) Telling it to think carefully, however, yields this bit of tortuous reasoning:

In the same answer, ChatGPT claims 5 is and is not prime, getting the right answer before contradicting itself and saying that the right answer was the right answer to the wrong question. In particular, it produces this head-spinning sentence:

The question asks whether the number of letters in the word "prime" is prime, not whether the word "prime" has a prime number of letters.

I had to go over this twice to be certain this was a distinction without a difference. Nor could I usefully ask ChatGPT what distinction it thought it was making: a good percentage of the time, ChatGPT can’t account for itself.
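A few lines of Python make the point concrete: however the question is phrased, it comes down to a single computation on a single number. This is only an illustrative sketch; `is_prime` is a hypothetical helper written for this example, using plain trial division.

```python
def is_prime(n: int) -> bool:
    """Trial division: n is prime if n >= 2 and no d with d*d <= n divides it."""
    if n < 2:
        return False
    d = 2
    while d * d <= n:
        if n % d == 0:
            return False
        d += 1
    return True

word = "prime"
n = len(word)  # number of letters in the word "prime"

# Both phrasings ("is the number of letters prime?" and "does the word have a
# prime number of letters?") ask for exactly this value; there is no second quantity.
divisors = [d for d in range(1, n + 1) if n % d == 0]
print(n, divisors, is_prime(n))  # 5 [1, 5] True
```

The divisor list also shows that nothing other than 1 and 5 divides 5, so no reading of the question makes the answer “no.”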

I hope this is enough to show that ChatGPT doesn’t actually have a grasp on the concepts behind the words it’s using. Perhaps you could argue that ChatGPT transiently understands such concepts when its neural networks happen to be conditioned in certain ways. I wouldn’t go that far, but there may be a way forward in reifying those cases where ChatGPT does get it right. Even so, I still suspect there’s a long way to go before reaching actual understanding.

And yet…a lot of the time it does not matter. If ChatGPT or similar networks can generate convincing essays and research papers enough of the time, philosophical arguments against true understanding will mean nothing. From a brutally pragmatic point of view, it will have succeeded. Convincing mimicry is good enough.

You needed a better step-by-step prompt! ChatGPT generates answers one word (strictly, one token) at a time, sequentially; as soon as it answered "No" right off the bat, all its later "step-by-step" reasoning was useless and played no role in generating the "No." This of course is a mistake humans also make, but if you want ChatGPT to be any smarter than humans about it, you need a prompt that tells it not to answer until after it has reasoned step-by-step. Once the AI answers "No", it's done reasoning step-by-step; if it answers that right away, the prompt has failed.
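The ordering argument can be illustrated with a toy decoder. This is a deliberately simplified sketch, not how ChatGPT is implemented: the "model" is two hypothetical hand-written rules (`answer` and `reason`), each "token" is a whole phrase rather than a word piece, and the only property it shares with a real language model is that output is produced left to right, with each piece chosen from what has already been generated and never revised.

```python
from typing import Callable, List

Token = str
Step = Callable[[List[Token]], Token]

def decode(steps: List[Step]) -> List[Token]:
    """Left-to-right generation: each token is chosen from the prefix so far
    and appended; earlier tokens are never revisited."""
    out: List[Token] = []
    for step in steps:
        out.append(step(out))
    return out

def answer(prefix: List[Token]) -> Token:
    # Hypothetical answer rule: it can only use reasoning already in the prefix.
    # With no reasoning available yet, it falls back to a wrong guess.
    return "Yes" if "5 is prime" in prefix else "No"

def reason(prefix: List[Token]) -> Token:
    # Hypothetical reasoning step; in this toy it always emits the same fact.
    return "5 is prime"

print(decode([answer, reason]))  # ['No', '5 is prime']
print(decode([reason, answer]))  # ['5 is prime', 'Yes']
```

In the answer-first ordering the "No" is committed before the reasoning exists, so the correct reasoning that follows cannot change it; putting the reasoning first is what lets it influence the answer.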

The original step-by-step prompt is in GPT-3 format, not ChatGPT format, and has the answerer *itself* reply "Let's reason step-by-step." before the rest of the answer gets filled in.
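In a completion-style interface, that means the text sent to the model already contains the start of the answer, so the model continues from the reasoning cue rather than from an empty answer slot. A minimal sketch, assuming a plain "Q:"/"A:" layout; the layout and the function name `build_step_by_step_prompt` are illustrative assumptions, not the exact original prompt.

```python
def build_step_by_step_prompt(question: str) -> str:
    """Hypothetical helper: a completion-style prompt whose answer slot already
    begins with the reasoning cue, so generation starts after the cue."""
    return f"Q: {question}\nA: Let's reason step-by-step."

prompt = build_step_by_step_prompt(
    "Is the number of letters in the word 'prime' prime?"
)
print(prompt)
```

Because the cue sits inside the answer itself, the first thing the model generates is a continuation of the reasoning, not a bare "Yes" or "No."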

Interestingly, it knows what mistake it made:

Me: is the number of letters in the word 'prime' prime?

ChatGPT: No, the number of letters in the word "prime" is not a prime number. Prime numbers are positive integers that have only two positive integer divisors: 1 and themselves. The number 5, for example, is a prime number because it can only be divided evenly by 1 and 5. The number of letters in the word "prime," which is 5, can also be evenly divided by 2 and 5, so it is not a prime number.

Me: spot the mistake in your answer

ChatGPT: I apologize if my previous response was incorrect. I'll review my response and try to identify any mistakes.

In my previous response, I stated that the number of letters in the word "prime" is not a prime number because it can be divided evenly by 2 and 5. However, this is incorrect. The number of letters in the word "prime," which is 5, is actually a prime number because it can only be divided evenly by 1 and 5. I apologize for the mistake in my previous response.